
Most marketers waste money on ads that do not drive results—but they do not realize it. Are your marketing efforts truly working, or would customers have converted anyway? In this article, we will explore incrementality testing: what it is, how to get started, and the challenges you need to prepare for.

By the end, you will know how to measure the real impact of your ads, optimize your budget, and turn data-driven insights into better marketing decisions.

Incrementality testing: understanding the basics and main types

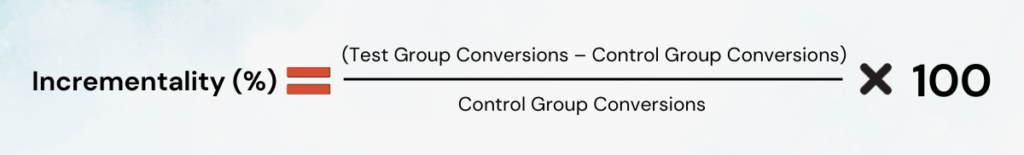

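Incrementality testing measures how much of your results a marketing effort actually caused. It compares a test group (exposed to ads) with a control group (not exposed). Assuming both groups are the same size, the formula is simple:

$$\text{Incrementality (\%)} = \frac{\text{Test group conversions} - \text{Control group conversions}}{\text{Control group conversions}} \times 100$$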

To better understand the incrementality testing process, here's an overview:

- Plan a campaign

- Identify your target audience or consumers

- Randomly split them into a test group and a control group

- Run the campaign, with ads going to the test group but NOT to the control group

- Observe your key metrics from both groups

- Calculate the impact
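The random split in the third step is the part most worth getting right, because it is what makes the two groups comparable. Below is a minimal Python sketch, assuming you already have a list of user IDs; `split_audience` is a hypothetical helper, not part of any ad platform's API.

```python
import random


def split_audience(user_ids, seed=42):
    """Randomly split user IDs into a test group and a control group.

    A fixed seed makes the split reproducible, so re-running the
    assignment puts every user back in the same group.
    """
    shuffled = list(user_ids)  # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)
    midpoint = len(shuffled) // 2
    test_group = shuffled[:midpoint]     # these users will see the ads
    control_group = shuffled[midpoint:]  # these users will not
    return test_group, control_group


users = [f"user_{i}" for i in range(10)]
test, control = split_audience(users)
print(len(test), len(control))  # 5 5
```

Randomizing, rather than hand-picking who sees the ads, keeps differences such as loyalty or purchase history from piling up in one group.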

For example, say you are in the sports niche and sell golf cart accessories. You run a mobile Facebook ad campaign for golf cart steering wheels and seat kits, showing ads to one group of golf cart owners (test group) while another, similar group (control group) sees no ads.

After two weeks:

- Test group: 350 purchases

- Control group: 280 purchases

Using the formula, here's the answer:

$$\text{Incrementality} = \frac{350 - 280}{280} \times 100 = 25\%$$

This means your Facebook mobile ads increased sales by 25%, indicating that your marketing effort drove real demand rather than capturing sales that would have happened anyway.
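The same arithmetic fits in a small Python function. This is a sketch: `incremental_lift` is a hypothetical name, and the optional group sizes are an addition for the common case where the test and control groups differ in size. In that case, comparing conversion rates is fairer than comparing raw counts.

```python
def incremental_lift(test_conversions, control_conversions,
                     test_size=None, control_size=None):
    """Return incremental lift as a percentage.

    If both group sizes are given, compare conversion rates instead of
    raw counts so unequal group sizes do not distort the result.
    """
    if test_size is not None and control_size is not None:
        test_value = test_conversions / test_size
        control_value = control_conversions / control_size
    else:
        test_value, control_value = test_conversions, control_conversions
    if control_value == 0:
        raise ValueError("Control group has no conversions; lift is undefined.")
    return (test_value - control_value) / control_value * 100


print(incremental_lift(350, 280))  # 25.0
```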

Why does this matter?

- It guides better budget allocation by showing which campaigns truly drive new revenue.

- It helps you stop over-crediting ad platforms for sales that would have happened anyway.

- It reveals the hidden value in underused media channels. For example, maybe your Google Ads seem to generate more conversions, but incrementality testing might show that your TikTok or YouTube ads drive new customers, not just repeat buyers.

To understand incrementality testing better, here are its different types:

A. Holdout group testing

Split your audience into test and control groups—the test group sees your ads, the control group does not. It helps provide insights into whether a marketing intervention (like an in-app ad or mobile push notification) truly changes user behavior.

If the control group converts at the same rate as the test group, your ads are not driving real business outcomes; you are paying for conversions you would have gotten anyway.
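In practice, the two groups rarely convert at exactly the same rate, so a common check is a two-proportion z-test. It estimates whether the gap between groups is larger than random noise would produce. Below is a minimal sketch using only the Python standard library. The 10,000-user group sizes are hypothetical, and the 0.05 threshold is a convention rather than a rule.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(test_conv, test_n, control_conv, control_n):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). A small p-value suggests the difference
    between the groups is unlikely to be random noise.
    """
    p_test = test_conv / test_n
    p_control = control_conv / control_n
    # Pooled rate under the null hypothesis that the ads have no effect
    p_pool = (test_conv + control_conv) / (test_n + control_n)
    std_err = sqrt(p_pool * (1 - p_pool) * (1 / test_n + 1 / control_n))
    z = (p_test - p_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical: 350 of 10,000 test users vs. 280 of 10,000 control users
z, p = two_proportion_z_test(350, 10_000, 280, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:
    print("Difference is unlikely to be noise")
else:
    print("No clear evidence the ads changed behavior")
```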

When should you use it?

Use holdout group testing when you need clear proof of impact before increasing ad spend. It is ideal for app install campaigns, in-app purchase promotions, or mobile retargeting, especially when testing new media channels or adjusting your marketing mix.

B. Geo-based testing

Geo-based testing runs ads in specific geographic regions while keeping others ad-free. You then compare performance data between these locations to measure incrementality—if sales or app installs rise only in ad-exposed areas, your advertising efforts are making an impact.

Why is this important?

Most mobile marketing strategies rely on user tracking, but privacy updates (like iOS ATT) make that unreliable. Geo-based testing solves this by focusing on regions instead of individual users.

When should you use it?

Use geo-based testing when expanding into new locations or testing localized advertising efforts. Suppose you run a local service-based business, like a Chicago-based window-washing company looking to attract more high-rise clients.

Run mobile ads targeting condo owners in Wicker Park, but keep Lincoln Park ad-free. If bookings spike only in Wicker Park, your ads are working. But if both areas see similar requests, your advertising efforts are not driving new demand.
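To make that comparison more robust, you can compare how bookings changed in each neighborhood rather than their raw totals. This is a difference-in-differences calculation, a standard technique swapped in here for illustration. The booking counts below are hypothetical.

```python
def diff_in_diff(test_before, test_after, control_before, control_after):
    """Difference-in-differences estimate of incremental bookings.

    Subtracts the change in the ad-free area from the change in the
    ad-exposed area, removing trends both areas share (e.g., seasonality).
    """
    test_change = test_after - test_before
    control_change = control_after - control_before
    return test_change - control_change


# Hypothetical weekly bookings, not real data
incremental = diff_in_diff(test_before=40, test_after=65,      # Wicker Park (ads)
                           control_before=38, control_after=45)  # Lincoln Park (no ads)
print(incremental)  # 18
```

Here Wicker Park gained 25 bookings, but Lincoln Park gained 7 without any ads, so roughly 18 of the new bookings are attributable to the campaign under this approach.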

C. Intent-to-treat (ITT) testing

ITT testing assigns users to test and control groups and analyzes results by that assignment, regardless of who actually saw the ads. Unlike other methods, it accepts that some users in the control group may still see your ads organically. This mirrors real-world conditions and helps measure incrementality more accurately.

Why does this matter?

Mobile users engage with ads across multiple platforms, which makes strict control over ad exposure unrealistic. ITT testing accounts for cross-platform spillover: for example, a user assigned to the control group may still see your ad on another channel, like TikTok.

This method prevents inflated incremental value estimates by reflecting how ads influence a target audience in a fragmented digital space.
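In an ITT analysis, users are compared by the group they were assigned to, not by whether they actually saw an ad. Below is a minimal sketch, assuming each user record is a dictionary with hypothetical `group`, `saw_ad`, and `converted` fields.

```python
def itt_effect(users):
    """Intent-to-treat effect: conversion-rate difference by assigned group.

    The 'saw_ad' field is deliberately ignored, so control users who saw
    the ad on another platform still count toward the control group.
    """
    rates = {}
    for group in ("test", "control"):
        members = [u for u in users if u["group"] == group]
        if not members:
            raise ValueError(f"No users assigned to the {group} group")
        rates[group] = sum(u["converted"] for u in members) / len(members)
    return rates["test"] - rates["control"]


users = [
    {"group": "test", "saw_ad": True, "converted": True},
    {"group": "test", "saw_ad": True, "converted": False},
    {"group": "test", "saw_ad": False, "converted": True},
    {"group": "test", "saw_ad": True, "converted": False},
    {"group": "control", "saw_ad": True, "converted": True},  # saw the ad on TikTok
    {"group": "control", "saw_ad": False, "converted": False},
    {"group": "control", "saw_ad": False, "converted": False},
    {"group": "control", "saw_ad": False, "converted": False},
]
print(itt_effect(users))  # 0.25
```

Because some control users were exposed anyway, this estimate tends to understate the effect of actually seeing the ad. That reflects the lift you can realistically expect when exposure cannot be fully controlled.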

When should you use it?

Use ITT testing when running a multi-platform marketing campaign or testing high-reach mobile ads.

D. Time-based testing

Time-based testing is fairly simple.

Essentially, you start a new campaign or add a new partner to your marketing mix. Crucially, keep this the only major change to your advertising and marketing campaigns, and ideally avoid minor changes as well. Then compare conversions, downloads, sales, or whatever your key metrics are before, during, and after the change.

If you see uplift, and the new campaign was the only change, it’s likely incremental.
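Below is a minimal sketch of that before/during/after comparison, assuming you have daily counts for each period. The install numbers are hypothetical. If conversions rise during the campaign and fall back afterward, that pattern is consistent with the campaign being the cause, though it cannot rule out outside events.

```python
from statistics import mean


def period_uplift(baseline_days, comparison_days):
    """Percent change in average daily conversions versus the baseline period."""
    baseline = mean(baseline_days)
    return (mean(comparison_days) - baseline) / baseline * 100


# Hypothetical daily installs
before = [100, 98, 105, 97, 100]
during = [118, 122, 120, 119, 121]
after = [104, 101, 99, 103, 102]

print(f"During vs before: {period_uplift(before, during):.1f}%")  # 20.0%
print(f"After vs before: {period_uplift(before, after):.1f}%")    # 1.8%
```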

Note that many changes outside your marketing mix can still affect your incrementality test: holidays, world events, weather events, competitor actions, App Store algorithm changes, and more.

E. Other incrementality methods

There are also ghost ads (or shadow testing) and synthetic control testing. Consider these if the other methods are not giving you reliable results.

Now, measure incrementality

How do you gather and compile your marketing data without unnecessary hassle?

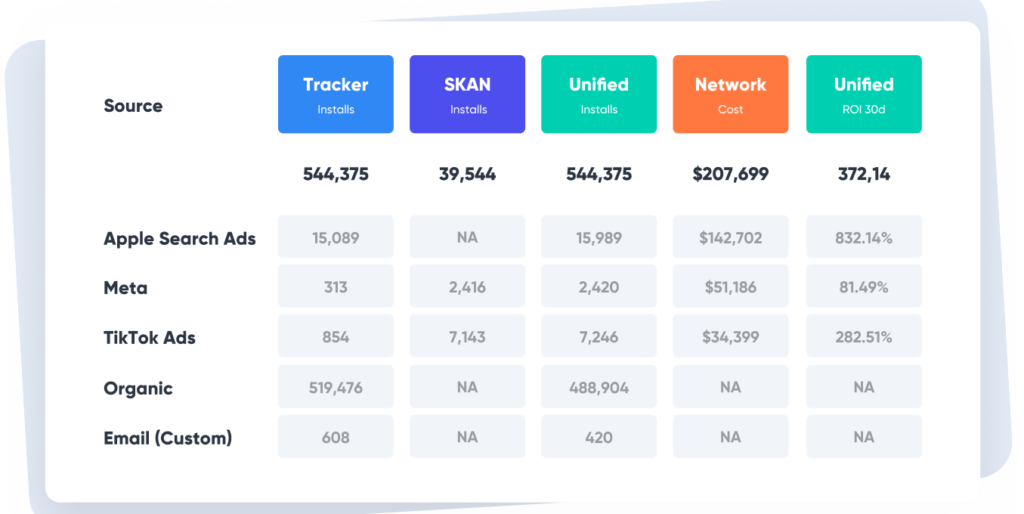

Use Singular’s marketing analytics tool to bring all your mobile marketing data into one easy-to-read report. See exactly where your ad spend is going, how users engage across devices, and what’s actually driving results.

With ready-made reports and automatic data transfers, you can measure incremental impact without digging through spreadsheets or manually matching data sources.

To make sure your findings and interpretations are accurate, consider hiring a data analyst. They can spot patterns you might miss, clean up messy data, and confirm that your results truly reflect incremental impact.

Incrementality testing 101: how to launch

Follow these best practices to set up a reliable test, measure true impact, and ensure your marketing investments drive real, data-backed growth.

1. Set clear goals to prove real advertising impact

Without clear goals, incrementality testing becomes guesswork. You might find a lift in conversions, but does it mean your marketing budget was well spent? Or did your customers convert anyway?

Set the right goals to make sure your marketing measurement focuses on real impact rather than misleading vanity metrics.

What to do

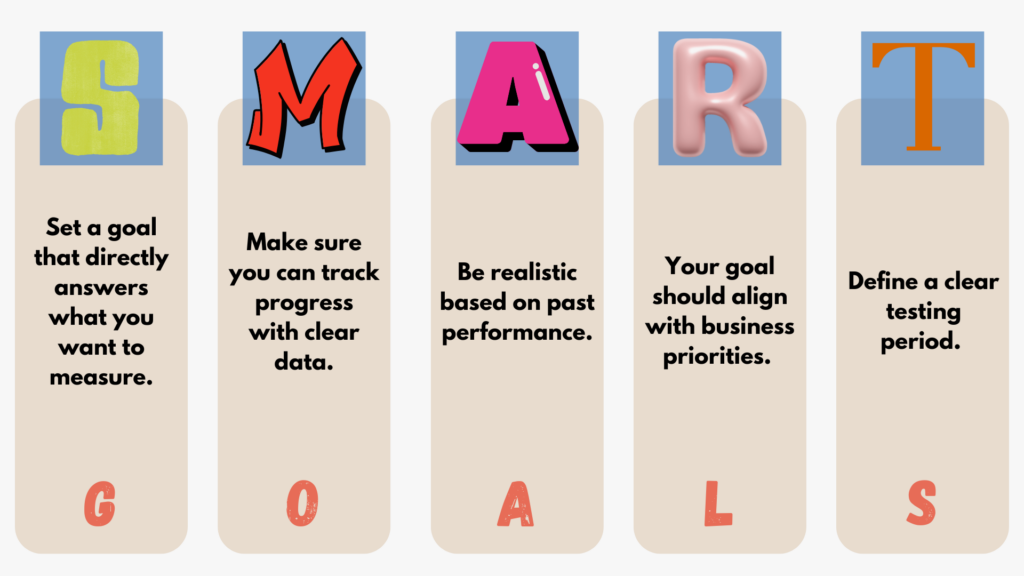

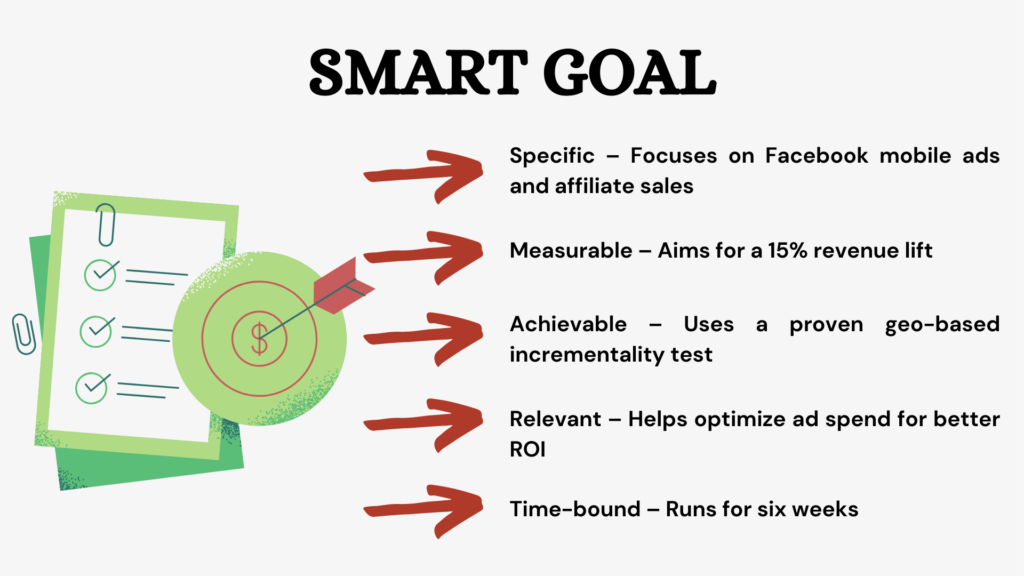

Use the SMART framework: make your goals Specific, Measurable, Achievable, Relevant, and Time-bound.

Suppose you run a resource website, such as an expert-led review site.

Here’s what your SMART goal can look like:

- Determine whether Facebook mobile ads drive new affiliate sales for high-end mattresses by running a geo-based incrementality test in two cities. Measure incremental revenue lift of at least 15% in the test group over six weeks, using affiliate link tracking and Google Analytics to compare conversions between regions.

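How is this SMART?

- Specific: it names the channel (Facebook mobile ads), the outcome (new affiliate sales for high-end mattresses), and the method (a geo-based test in two cities).

- Measurable: it sets a target of at least 15% incremental revenue lift, tracked with affiliate links and Google Analytics.

- Achievable: it limits the test to two cities and relies on standard tracking tools.

- Relevant: it ties ad spend directly to affiliate revenue.

- Time-bound: it runs for six weeks.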

Here are more reminders to set clear goals:

- If your ads run across multiple channels, set goals that measure each platform’s unique contribution to avoid misattributing results.

- If growth matters most, focus on customer acquisition. If you want to know how well you are retaining customers, measure repeat purchases in the treatment group.

2. Measure incremental lift without external factors

External factors, like seasonality, can skew your results, making an ad campaign seem more effective than it really is. Think of running mobile ads for premium headphones during the holiday season. Sales might skyrocket, but how much of that is from your ads versus holiday shopping trends or site-wide discounts from third-party retailers like Amazon?

If you do not account for seasonal trends and shifts in consumer behavior, you risk inflating your incremental impact and making budget decisions based on misleading data.

What to do

Compare against your historical data to see if similar trends happened without ads, which can help you isolate the real impact of your campaign. Also, avoid launching incrementality tests during major sales seasons unless you also test in low-demand periods for comparison.
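One simple way to use historical data is to compare this season's growth with growth over the same calendar window last year, when no test ads ran. The functions and figures below are hypothetical. The approach assumes last year's seasonal pattern is a fair stand-in for this year's.

```python
def growth_pct(before, after):
    """Percent change from one period's sales to another's."""
    return (after - before) / before * 100


def season_adjusted_lift(baseline_now, campaign_now,
                         baseline_last_year, peak_last_year):
    """Growth during the campaign minus last year's growth over the same
    calendar window (when no test ads ran), in percentage points."""
    return (growth_pct(baseline_now, campaign_now)
            - growth_pct(baseline_last_year, peak_last_year))


# Hypothetical headphone sales: October baseline vs. holiday weeks
lift = season_adjusted_lift(
    baseline_now=1_000, campaign_now=1_600,        # this year, with ads
    baseline_last_year=900, peak_last_year=1_350,  # last year, no ads
)
print(f"Growth beyond the usual holiday bump: {lift:.1f} points")  # 10.0 points
```

Sales grew 60% this holiday season, but they also grew 50% last year without ads, so only about 10 percentage points are plausibly due to the campaign.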

You should also use multiple control groups. To do this, split your audience across different regions or demographics to get valuable insights into how external factors influence results.

Suppose you are running mobile ads for a sleep-tracking app. Instead of using just one control group, split your audience by region and lifestyle:

- Remote workers in Colorado

- Busy professionals in New York

This helps you see if external factors like work schedules or climate impact app adoption, giving you more accurate insights.

Additionally, test your marketing collateral separately. To do this, individually test your video ads, digital content, and influencer campaigns to know which avenue is truly driving conversions.

3. Set a fixed testing period to avoid chasing false success

Running an incrementality test without a fixed timeline is like checking your fitness progress after one workout—it will not tell you much.

If you measure results too early, temporary spikes in conversions can mislead you into thinking your ads worked. If you wait too long, external factors like competitor promotions or seasonal trends can distort your results.

A fixed testing period keeps short-term ad hype from looking like real growth. It helps you calculate incrementality accurately by making sure weekend shopping surges, payday spikes, or sudden influencer buzz do not inflate results.

What to do

Match test length to customer buying cycles. So, if your product has a longer decision-making process, extend your test period to capture delayed conversions.

For example, if you have a service-based business like online guitar courses, most buyers do not sign up immediately—they research options, watch free lessons, and compare instructors before committing. Instead of testing for just a week, run your incrementality test for at least 30 days to capture those delayed conversions from serious learners.

Another best practice for setting a fixed testing period is to avoid running tests during major marketing activities. For example, if you are launching a new product or running a seasonal promotion, hold off on testing to prevent inflated results.

You should also review past campaign effectiveness trends to set a duration that aligns with natural customer behavior. Lastly, stick to a consistent time frame for comparability. If you run tests with inconsistent timelines, it makes it harder to compare results across different campaigns.
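Past campaign data can also tell you how long a test must run to detect a meaningful lift at all. The sketch below uses the standard normal-approximation sample-size formula for comparing two proportions, a common statistical technique swapped in here for illustration. The 3% baseline conversion rate, 15% minimum lift, and 1,000 daily users per group are hypothetical inputs.

```python
import math
from statistics import NormalDist


def users_per_group(baseline_rate, min_relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in each group to detect a relative lift.

    Uses the normal-approximation formula for a two-sided,
    two-proportion test.
    """
    p_control = baseline_rate
    p_test = baseline_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_test * (1 - p_test)
    n = (z_alpha + z_power) ** 2 * variance / (p_test - p_control) ** 2
    return math.ceil(n)


def days_needed(baseline_rate, min_relative_lift, daily_users_per_group):
    """Days of traffic required to reach the sample size in each group."""
    required = users_per_group(baseline_rate, min_relative_lift)
    return math.ceil(required / daily_users_per_group)


n = users_per_group(0.03, 0.15)
print(n, "users per group")
print(days_needed(0.03, 0.15, 1_000), "days")
```

If the estimate comes out much longer than your buying cycle, consider testing a larger audience rather than cutting the test short.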

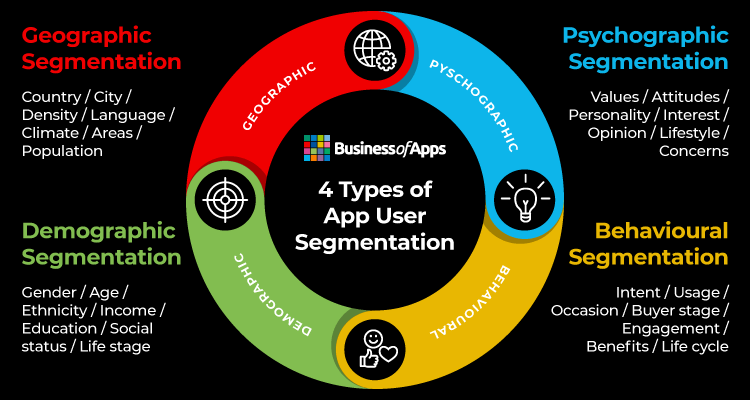

4. Test different mobile app user segments

Not all mobile app users behave the same way, and running a single test for all users can hide what is actually working. For example, new users might respond better to content marketing, while returning users convert through retargeting ads.

By testing different segments, you can see which advertising channel drives the most incremental impact at each stage of the customer journey.

What to do

Segment based on user behavior. Split your users into distinct groups, like first-time visitors, frequent users, and inactive users. For example, if you have a fitness app, new users might engage more with free trial offers, while long-time users may respond better to loyalty rewards. By testing ad strategies separately for each segment, you can avoid wasting ad spend on users who would have converted anyway.

Test age-specific responses. Older adults interact with apps differently than younger users. For example, if you are marketing a health-tracking app, older users may respond better to ads that highlight easy-to-read displays and simplified navigation.

This is especially important as 25% of seniors experience visual impairment, which can make small text and complex interfaces frustrating to navigate. Ensuring your app highlights features like large fonts, high-contrast colors, and voice assistance in your ads can improve engagement and drive real conversions from this segment.

You should also track incrementality at different stages of the customer journey. Run separate tests for users who just installed the app, those who have used it for about a month, and long-time users to identify where ads have the most impact.
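Below is a minimal sketch of splitting results by lifecycle stage, assuming you know each user's days since install, their test or control assignment, and whether they converted. The stage cutoffs (7 and 60 days) and the sample records are hypothetical. Pick boundaries that match how your app is actually used.

```python
from collections import defaultdict


def lifecycle_stage(days_since_install):
    """Hypothetical stage cutoffs; adjust to your app's usage patterns."""
    if days_since_install < 7:
        return "just_installed"
    if days_since_install < 60:
        return "about_a_month"
    return "long_time"


def lift_by_stage(records):
    """Percent lift per lifecycle stage.

    records: iterable of (days_since_install, group, converted), where
    group is "test" or "control". Stages with missing data map to None.
    """
    # stage -> group -> [conversions, users]
    counts = defaultdict(lambda: {"test": [0, 0], "control": [0, 0]})
    for days, group, converted in records:
        cell = counts[lifecycle_stage(days)][group]
        cell[0] += int(converted)
        cell[1] += 1

    lifts = {}
    for stage, groups in counts.items():
        (t_conv, t_users), (c_conv, c_users) = groups["test"], groups["control"]
        if t_users == 0 or c_users == 0 or c_conv == 0:
            lifts[stage] = None  # not enough data to compute a lift
            continue
        t_rate, c_rate = t_conv / t_users, c_conv / c_users
        lifts[stage] = (t_rate - c_rate) / c_rate * 100
    return lifts


records = [
    (2, "test", True), (3, "test", True), (1, "control", True), (4, "control", False),
    (90, "test", True), (120, "test", False), (200, "control", True), (95, "control", False),
]
print(lift_by_stage(records))  # {'just_installed': 100.0, 'long_time': 0.0}
```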

5. Measure across different mobile platforms

An ad can show high conversions on Android, but if iOS users would have converted without the ad, your marketing performance is not as strong as it seems.

Why is that?

Because incrementality testing is not just about counting conversions; it is about proving the ad caused them.

If an ad shows high conversions on Android, but iOS users—who never saw the ad—converted at a similar rate, it suggests the ad didn’t truly drive demand. The sales might have happened anyway, meaning your marketing performance is inflated, and you are spending on ads that do not add real value.

What to do

Compare marketing channels for each platform. For example, ads on Facebook or TikTok might drive more incremental conversions on iOS, while Google Ads could perform better on Android—test each separately.

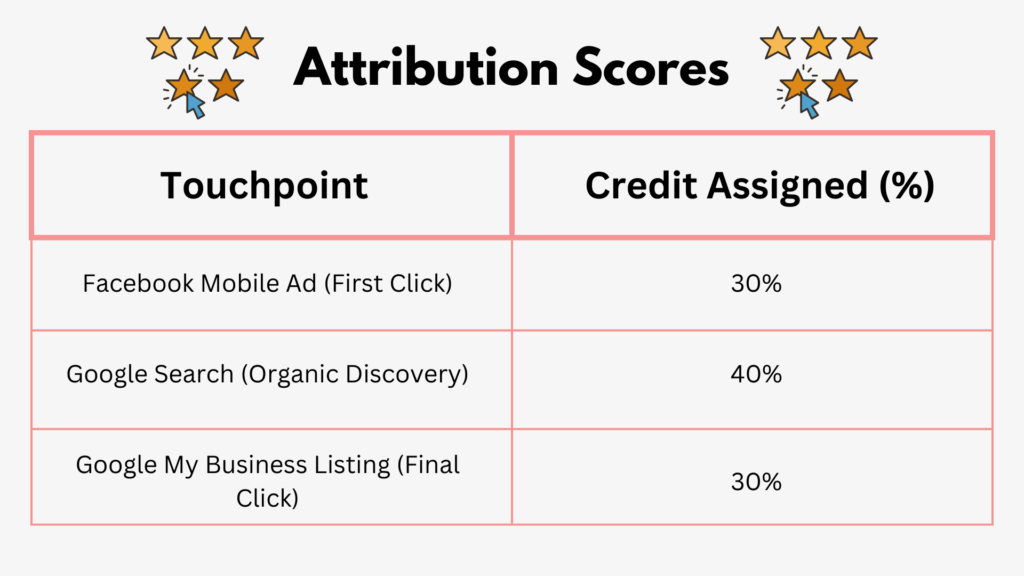

In addition, analyze multi-touch attribution (MTA), a marketing measurement method that assigns credit to each touchpoint in the customer journey to show how different channels contribute to a conversion.

MTA helps you determine which of these touchpoints had the biggest impact so you know where to focus your marketing spend for the best return.

For example, if you offer local SEO services, a business owner might:

- See your mobile ad on Facebook (first exposure).

- Search for “best local SEO agency” on Google (engagement).

- Tap your Google My Business (GMB) listing and call you (conversion).

For your model, you can assign credit like this:
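As one illustration, a widely used option is a position-based (sometimes called U-shaped) model. It gives most of the credit to the first and last touchpoints and splits the rest across the middle. The 40/20/40 weights below are a common convention rather than a rule, and `position_based_credit` is a hypothetical helper. Keep in mind that attribution shows how credit is distributed along converting paths. It does not by itself prove a touchpoint was incremental.

```python
def position_based_credit(touchpoints, first_share=0.4, last_share=0.4):
    """Split one conversion's credit across an ordered list of touchpoints.

    First and last touchpoints get fixed shares; any remaining credit is
    divided evenly among the touchpoints in between.
    """
    n = len(touchpoints)
    if n == 0:
        return {}
    if n == 1:
        shares = [1.0]
    elif n == 2:
        # No middle touches: split all credit between first and last
        total = first_share + last_share
        shares = [first_share / total, last_share / total]
    else:
        middle = (1.0 - first_share - last_share) / (n - 2)
        shares = [first_share] + [middle] * (n - 2) + [last_share]

    credit = {}
    for touchpoint, share in zip(touchpoints, shares):
        # Accumulate in case the same channel appears more than once
        credit[touchpoint] = credit.get(touchpoint, 0.0) + share
    return credit


journey = ["Facebook mobile ad", "Google search", "GMB listing call"]
for touchpoint, share in position_based_credit(journey).items():
    print(f"{touchpoint}: {share:.0%}")
```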

Once you identify which platform drives more true incremental conversions, adjust your budget to maximize performance.
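To compare platforms on equal footing before shifting budget, one useful figure is cost per incremental conversion (sometimes called incremental CPA): spend divided by the conversions the ads actually caused. Below is a minimal sketch. The platform names, spend, and conversion counts are hypothetical.

```python
def cost_per_incremental_conversion(spend, test_conv, test_users,
                                    control_conv, control_users):
    """Spend divided by the conversions the ads caused.

    Incremental conversions = test conversions minus the conversions the
    test group would likely have produced at the control group's rate.
    """
    expected_without_ads = control_conv * test_users / control_users
    incremental = test_conv - expected_without_ads
    if incremental <= 0:
        return float("inf")  # no measurable extra conversions from the ads
    return spend / incremental


# Hypothetical: (spend, test conv, test users, control conv, control users)
results = {
    "Android / Google Ads": (5_000, 400, 20_000, 300, 20_000),
    "iOS / TikTok": (5_000, 350, 20_000, 330, 20_000),
}
ranked = sorted(results.items(),
                key=lambda item: cost_per_incremental_conversion(*item[1]))
for name, numbers in ranked:
    cost = cost_per_incremental_conversion(*numbers)
    print(f"{name}: ${cost:,.2f} per incremental conversion")
```

Channels with a lower cost per incremental conversion are candidates for more budget; an infinite value means the test found no lift worth paying for.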

3 hurdles in incrementality testing (and how to overcome them)

Review your latest advertising campaign and spot where your incrementality testing might be falling short. Fix these hurdles now to get accurate results and optimize your budget.

A. No clear baseline for mobile user behavior

Without knowing how users typically interact with your app before ads, you cannot tell if an increase in conversions comes from your campaign or just normal user activity. For example, if your app sees a natural weekly surge in downloads, running a test during that time without a proper baseline will inflate your results.

Here’s how to get a clear baseline before you run a test:

- Run a pilot test in one city or audience group before scaling.

- Match test timing with normal activity cycles. Do not test only during high-traffic seasons.

- Define treatment and control groups early. Segment users before running ads to avoid biased test results.

- Track user-level data for at least 30 days. Measure app installs, in-app actions, and purchases before running any test.
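Once you have 30 or more days of data, a simple baseline is the average for each day of the week. This exposes natural weekly surges like the one described above. Below is a minimal sketch, assuming daily install counts keyed by date. The sample data is hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta
from statistics import mean

WEEKDAYS = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")


def weekday_baseline(daily_installs):
    """Average installs for each weekday from a {date: installs} mapping."""
    by_weekday = defaultdict(list)
    for day, installs in daily_installs.items():
        # date.weekday() returns 0 for Monday through 6 for Sunday
        by_weekday[WEEKDAYS[day.weekday()]].append(installs)
    return {name: mean(by_weekday[name]) for name in WEEKDAYS if by_weekday[name]}


# Hypothetical 35 days (5 full weeks) with a weekend surge
start = date(2024, 1, 1)  # a Monday
history = {}
for offset in range(35):
    day = start + timedelta(days=offset)
    history[day] = 150 if day.weekday() >= 5 else 100

baseline = weekday_baseline(history)
print(baseline)
```

During a test, compare each day's results against the baseline for the same weekday rather than against the overall average.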

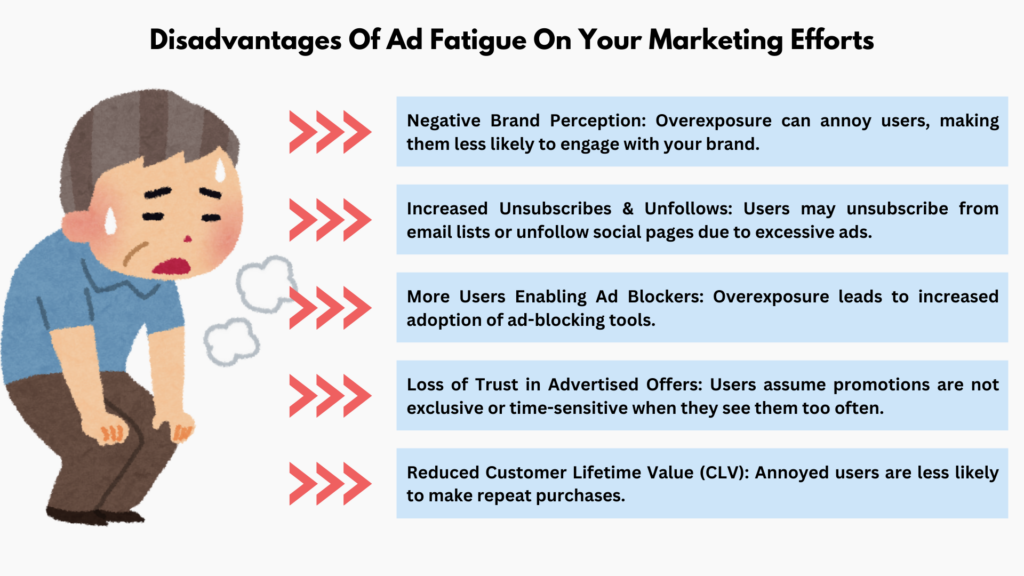

B. Ad fatigue on mobile

Ad fatigue happens when users see the same ad too many times. If your incrementality test runs too long or serves ads too frequently, users might start ignoring or skipping them, making your campaign performance look worse than it actually is.

For example, if a mobile user sees your ad five times a day, their likelihood of clicking is likely to drop, and your results may underestimate your true incremental impact.

Here’s how to avoid this:

- Use dynamic ad creatives. Personalize ads based on user behavior to prevent repetitive messaging.

- Limit ad frequency per user. Set a cap on how often a single user sees your ad in a day or week.

- Rotate ad creatives every two weeks. Swap in fresh visuals, messaging, or formats to keep engagement high.

- Expand to multiple ad formats. Test video, carousel, and interactive ads to keep things fresh.
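Many ad platforms let you set frequency caps in campaign settings. If you serve ads yourself (for example, in-app promotions), the capping logic is simple to sketch. The `FrequencyCap` class and the cap of 3 impressions per day are hypothetical.

```python
from collections import defaultdict
from datetime import date


class FrequencyCap:
    """Tracks impressions per user per day and blocks serving past a cap."""

    def __init__(self, max_per_day=3):
        self.max_per_day = max_per_day
        self._served = defaultdict(int)  # (user_id, day) -> impressions served

    def try_serve(self, user_id, day):
        """Record an impression and return True, or return False if capped."""
        key = (user_id, day)
        if self._served[key] >= self.max_per_day:
            return False
        self._served[key] += 1
        return True


cap = FrequencyCap(max_per_day=3)
today = date(2025, 1, 15)
print([cap.try_serve("user_42", today) for _ in range(5)])
# [True, True, True, False, False]
```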

C. Overlapping mobile campaigns

If you are testing a Facebook ad campaign while also running Google UAC ads, how do you know which one is actually driving conversions? Overlapping campaigns blur results, making it impossible to tell if an increase in installs, purchases, or engagement comes from a specific ad or the combined effect of multiple ads.

Here’s how you can avoid this:

- Adjust bidding strategies to reduce overlap so your ads on different platforms are less likely to compete for the same users.

- Keep track of launch dates to prevent overlapping test periods. To do this, map out all active and planned campaigns.

- Review past data before launching new tests. Learn from previous overlaps to improve future campaign planning.

- Run sequential, not simultaneous, tests. Test one variable at a time before layering in new campaigns.
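Mapping launch dates is easy to automate. Below is a minimal sketch that flags campaigns whose date ranges overlap. The campaign names and dates are hypothetical.

```python
from datetime import date
from itertools import combinations


def overlapping_campaigns(campaigns):
    """Return pairs of campaign names whose date ranges overlap.

    campaigns: dict of name -> (start_date, end_date), both inclusive.
    """
    overlaps = []
    for (name_a, (start_a, end_a)), (name_b, (start_b, end_b)) in combinations(
        campaigns.items(), 2
    ):
        # Two inclusive ranges overlap if each starts before the other ends
        if start_a <= end_b and start_b <= end_a:
            overlaps.append((name_a, name_b))
    return overlaps


schedule = {
    "Facebook test": (date(2025, 3, 1), date(2025, 3, 28)),
    "Google UAC": (date(2025, 3, 20), date(2025, 4, 15)),
    "TikTok test": (date(2025, 4, 20), date(2025, 5, 10)),
}
print(overlapping_campaigns(schedule))  # [('Facebook test', 'Google UAC')]
```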

Conclusion

Avoid assumptions; test everything. With incrementality testing, you can validate your marketing efforts without inflating results. Meet with your team to identify weak spots in your measurement approach.

Are your treatment and control groups properly set up? Are you accounting for external factors? Then decide which best practices you can apply first.

But before all that, make sure you have clean data. Use Singular to collect and manage your marketing data in one place. Book a demo now to see how it works in action.